An EDF analysis of carbon credits for rice growers shows great climate and cost-savings potential, but is that enough for farmers to participate?

In 2015, rice became the first crop for which agricultural carbon credits were valid for compliance in the California cap-and-trade system. Unfortunately, as of September 2020, no compliance credits have been generated. A newly released report by EDF explores the reasons why.

In the U.S., agricultural greenhouse gas emissions comprise approximately 10% of the economy-wide total emissions. The share of emissions from agriculture is larger for non-CO2 GHGs, making up approximately 78% of the U.S. total for nitrous oxide and 38% for methane.

Policymakers are eager to find mitigation opportunities in the agriculture sector, best evidenced by the bipartisan Growing Climate Solutions Act, which seeks to enable voluntary credit markets for producers to mitigate climate change.

As both policymakers and producers eye the potential of the agriculture sector to grow climate solutions, it’s worth taking a closer look at both the opportunities and the challenges that must first be addressed to tap this potential.

A case study of carbon credits for rice

EDF’s work on agricultural carbon credits began in earnest in 2007, when EDF received the first of several U.S. Department of Agriculture grants to investigate how to bring agricultural emission reduction credits to market. The objective was to design crediting systems that deliver two benefits at once: lower GHG emissions and meaningful revenue opportunities for landowners.

An EDF discussion paper summarizes some of the underlying analytics of these efforts for a series of crops and geographies. One example from the paper, rice in California, highlights both the carbon- and cost-saving opportunities of conservation practices such as straw baling and drainage, and the challenges of relying on agricultural credits as a viable abatement measure.

The opportunity: Lowering costs and emissions

Rice is a GHG-intensive crop. It produces twice the emissions per calorie of wheat and three times those of maize, and it accounts for 5-20% of global methane emissions. EDF’s research focused on the nation’s two largest rice-producing regions, California’s Sacramento Valley and the Mid-Southern U.S., which produce 26% and 72% of the domestic rice supply, respectively.

Our analysis began by using a biogeochemical model, DeNitrification-DeComposition (DNDC), to estimate emissions under current (baseline) practices and the abatement potential of lower-GHG alternatives in the California rice region. Our scientists found a fairly large overall mitigation potential of more than 0.6 MMt CO2e/year (million metric tons of CO2-equivalent, based on 100-year global warming potentials), or approximately 15% of total California rice emissions.

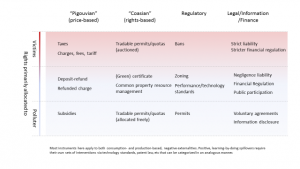

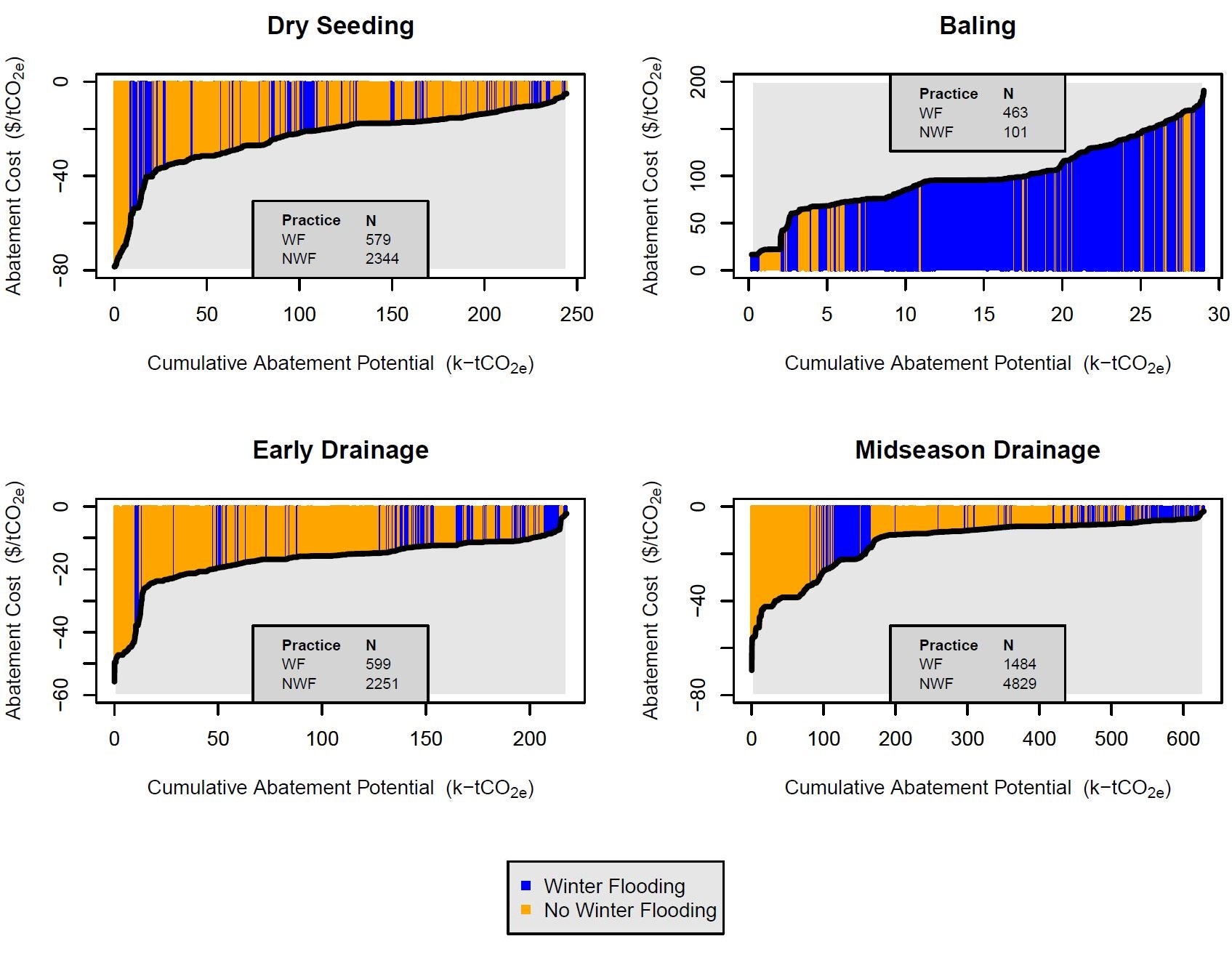

We then estimated abatement costs for each practice using cost budgets and consultations with agronomists. Combining these estimates with the GHG modeling yielded the following marginal abatement cost curves (one for each practice).

Marginal Abatement Cost Curves for Rice Practices in California. Abbreviations: N, number of fields; WF, winter flooding of rice paddies; NWF, no winter flooding. Historically, WF and NWF fields have followed a 60/40 split, which helps determine the scale of achievable reductions.

These graphs show that all but one practice have negative average abatement costs, ranging from -$29.45/acre to -$0.45/acre, which suggests that farmers could save money by changing practices. The DNDC model projected that yields would remain largely unchanged under every practice except dry seeding, which would reduce yields by an average of 4.5%.
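Curves like these come from pairing each field's modeled reduction with its per-acre cost. As a minimal sketch of that construction, assuming each field has a single net adoption cost and a single modeled reduction for a given practice, fields can be converted to a cost per ton and sorted from cheapest to most expensive. The `Field` class, the `mac_curve` function and all input numbers are hypothetical illustrations, not EDF's data or code.

```python
from dataclasses import dataclass


@dataclass
class Field:
    name: str                  # hypothetical field label
    cost_per_acre: float       # net cost of adopting the practice, $/acre (negative = savings)
    abatement_per_acre: float  # modeled reduction vs. baseline, t CO2e/acre/yr
    acres: float


def mac_curve(fields):
    """Build a marginal abatement cost curve for one practice.

    Returns (name, $/t CO2e, cumulative t CO2e/yr) tuples,
    ordered from the cheapest abatement to the most expensive.
    """
    steps = []
    for f in fields:
        if f.abatement_per_acre <= 0:
            continue  # fields with no reduction have no per-ton cost
        cost_per_ton = f.cost_per_acre / f.abatement_per_acre
        steps.append((cost_per_ton, f.abatement_per_acre * f.acres, f.name))
    steps.sort(key=lambda s: s[0])  # cheapest tons first

    curve, cumulative = [], 0.0
    for cost_per_ton, tons, name in steps:
        cumulative += tons  # x-axis: total abatement achieved so far
        curve.append((name, round(cost_per_ton, 2), round(cumulative, 1)))
    return curve


# Made-up example inputs, not EDF results.
fields = [
    Field("A", cost_per_acre=-12.0, abatement_per_acre=0.8, acres=100),
    Field("B", cost_per_acre=5.0, abatement_per_acre=0.5, acres=200),
    Field("C", cost_per_acre=-3.0, abatement_per_acre=0.6, acres=150),
]
for step in mac_curve(fields):
    print(step)
```

With these inputs, fields A and C land left of zero (net savings per ton) and field B to the right, which is the same reading the graphs support for most practices.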

These findings show great promise in terms of GHG abatement potential and cost savings for producers with minimal yield impacts (dry seeding aside). So, why aren’t growers already pursuing these practices? What barriers are getting in the way?

Three key barriers to entry

Our analysis identified three potential barriers that keep farmers from generating carbon credits.

- Weak price signals

Understanding why growers pass up potential cost savings from practices that reduce GHG emissions requires a closer look at farm economics. Adam Jaffe offers a useful typology of barriers to adoption, some of which I draw on below.

Putting practice costs and yield impacts together, imagine a carbon market with a price of $10/ton (the California spot price when this work was carried out). With an average reduction of 0.7 ton/acre, a typical rice grower would earn about $7/acre from the market: roughly 0.5% of crop sales revenue (typically $1,500/acre), or 2.6% of net profit (approximately $250/acre). This excludes any further gains from the negative abatement costs of certain practices and locations.

Unfortunately, in the context of overall farm economics, this is a weak incentive.

If we instead assume a carbon price closer to today’s social cost of carbon ($42/ton), potential market revenue rises to roughly 2% of crop sales revenue and 11% of net profit. At this price the incentive is substantially stronger, which tells us that with a strong price signal reflecting the social value of abatement, the market could be viable. As things stand, however, conditions fall short of this potential.
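The two price scenarios reduce to one line of arithmetic: revenue per acre is the carbon price times the tons abated per acre, compared against sales and net profit per acre. This sketch uses the rounded averages quoted above (0.7 t/acre, $1,500/acre sales, $250/acre net profit) as defaults; the function name is hypothetical.

```python
def carbon_revenue_shares(price_per_ton, tons_per_acre=0.7,
                          sales_per_acre=1500.0, profit_per_acre=250.0):
    """Credit revenue per acre and its share of sales and net profit."""
    revenue = price_per_ton * tons_per_acre  # $/acre earned from credits
    return revenue, revenue / sales_per_acre, revenue / profit_per_acre


for price in (10, 42):  # 2020 spot price vs. social cost of carbon, $/t CO2e
    revenue, sales_share, profit_share = carbon_revenue_shares(price)
    print(f"${price}/t: ${revenue:.2f}/acre, "
          f"{sales_share:.1%} of sales, {profit_share:.1%} of profit")
```

With these rounded inputs the sales shares match the text (about 0.5% and 2.0%), while the profit shares come out slightly higher (2.8% and 11.8%) than the 2.6% and 11% quoted above, presumably because the article's figures rest on unrounded farm-budget averages.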

- Large transaction costs

Another critical consideration for engaging in any market is transaction costs — for GHG markets in particular, monitoring, reporting and verification (MRV) costs.

Our analysis found transaction costs to be significant on a per-grower basis: approximately $14/acre for an average 1,000-acre California farm. At a market price of $10/ton, that is double the average expected return from carbon markets of $7/acre, a steep disincentive. Even with credits priced at the higher social cost of carbon ($42/ton), transaction costs would still equal nearly 50% of potential revenue, essentially cutting expected financial gains in half.
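The comparison can be checked directly by netting the $14/acre MRV cost against credit revenue at each price. This minimal sketch uses the averages from the text as defaults and, like the text, ignores savings from negative abatement costs; `mrv_burden` is a hypothetical helper name.

```python
def mrv_burden(price_per_ton, tons_per_acre=0.7, mrv_cost_per_acre=14.0):
    """Compare per-acre credit revenue with per-acre MRV transaction costs."""
    revenue = price_per_ton * tons_per_acre
    net = revenue - mrv_cost_per_acre          # negative means a loss
    cost_ratio = mrv_cost_per_acre / revenue   # MRV cost as a share of revenue
    return revenue, net, cost_ratio


for price in (10, 42):
    revenue, net, ratio = mrv_burden(price)
    print(f"${price}/t: revenue ${revenue:.2f}, net ${net:.2f}, "
          f"MRV = {ratio:.0%} of revenue")
```

At $10/ton the grower nets about -$7/acre (MRV at 200% of revenue); at $42/ton, MRV consumes about 48% of the $29.40/acre in revenue.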

Further economic modeling showed the importance of allowing projects to be aggregated for MRV transactions, because third-party fees to verify reductions are very large. However, even if growers aggregate to share costs, it will be critical to use technologies like remote sensing and automated data generation and analysis to streamline this process, realize savings and still guarantee accurate verification.
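Aggregation helps because part of the verification cost is roughly fixed per project and can be spread over more acres. The sketch below is purely illustrative: the split of the $14/acre figure into a fixed fee and a per-acre variable cost is a HYPOTHETICAL assumption, not an EDF estimate, chosen only so that a single 1,000-acre farm reproduces the cost cited above.

```python
def mrv_cost_per_acre(n_farms, acres_per_farm=1000.0,
                      fixed_fee=10_000.0, variable_per_acre=4.0):
    """Per-acre MRV cost when n farms share one third-party verification.

    fixed_fee and variable_per_acre are HYPOTHETICAL; they are chosen only
    so that a single 1,000-acre farm pays the ~$14/acre cited in the text.
    """
    total_acres = n_farms * acres_per_farm
    return fixed_fee / total_acres + variable_per_acre  # fixed share shrinks with scale


for n in (1, 5, 10, 50):
    print(f"{n:>2} farms: ${mrv_cost_per_acre(n):.2f}/acre")
```

Under this assumed split, aggregating more farms drives the fixed share toward zero, but the variable per-acre portion remains; that residual is where remote sensing and automated analysis would need to deliver savings.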

- Changing behavior is an obstacle in itself

Finally, behavioral factors represent a hurdle that cannot be ignored — the hidden additional cost of switching practices. This cost is difficult to quantify precisely, but we know from experience that behavior is hard to shift and farming practice changes typically require planning and close coordination with a number of consultants and business partners.

With this in mind, we surveyed corn and almond growers, asking how much they would need to be paid as carbon market participants to reduce fertilizer applications, and thereby nitrous oxide emissions. To isolate behavioral barriers, we designed the survey to prompt farmers to assume no additional costs, risks or yield impacts.

Their responses ranged from $18 to $40/acre, whereas a representative farmer might receive only $7/acre in returns at a $10/ton carbon price. This valuation gap likely reflects factors such as personal or cultural values and aversion to risk and uncertainty, which may be very difficult to overcome with market incentives alone[1].
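One way to read the gap is to ask what carbon price would make credit revenue match growers' stated asks. The text pairs the survey range with $7/acre at $10/ton, which implies about 0.7 t CO2e/acre; reusing that figure is an assumption, since the survey concerned corn and almond fertilizer reductions rather than rice. The `breakeven_price` helper is hypothetical, and the optional MRV term reuses the $14/acre estimate from above.

```python
def breakeven_price(required_per_acre, tons_per_acre=0.7, mrv_cost_per_acre=0.0):
    """Carbon price ($/t CO2e) at which credit revenue covers a grower's ask.

    required_per_acre: payment growers say they need ($/acre).
    mrv_cost_per_acre: optional transaction cost the grower must also recover.
    """
    return (required_per_acre + mrv_cost_per_acre) / tons_per_acre


for ask in (18, 40):  # survey range, $/acre
    print(f"ask ${ask}/acre: ${breakeven_price(ask):.2f}/t, "
          f"${breakeven_price(ask, mrv_cost_per_acre=14):.2f}/t with MRV costs")
```

Under these assumptions the asks imply roughly $26 to $57/ton before transaction costs and about $46 to $77/ton once the $14/acre MRV cost is added, compared with the $10/ton price at the time of the analysis.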

Managing risk and risk perceptions is a challenge that must be addressed to see widespread uptake of mitigation practices.

Where do we go from here?

The agricultural sector has the potential to play a key role in contributing to national climate goals.

Crediting systems are just one tool to support this, but more research and pilot programs are needed to help overcome the barriers to entry, increase confidence in high-quality and cost-effective credits, and also evaluate and correct for potential inequities and injustices.

EDF is launching a new phase of research dedicated to this work, in addition to developing complementary finance and policy tools that correct for existing disincentives and inequities to create a more just and resilient food system.

With the right combination of tools in the toolbox, we can unleash the power of carbon markets to boost long-term resilience on the farm and beyond.

[1] All of the numbers above are averages. Incentives can be large for individual growers, and some may face zero or even negative switching costs, so many farmers are well positioned to participate in carbon markets.