I’m from Aotearoa, New Zealand, and I really love its land and people, but I am fully aware that from a global perspective it appears pretty insignificant – that’s actually one of its charms. But being small doesn’t mean you can’t make big contributions, including toward stabilizing the climate. This recently published article highlights some lessons that New Zealand’s experience with emissions trading can offer other Emissions Trading System (ETS) designers at a time when effective climate action is ever more urgent.

Talking intensively to ETS practitioners and experts around the globe about their diverse choices, and the reasons why they made them, has made me acutely aware of the need to tailor every ETS to local conditions. In a complex, heterogeneous world facing an existential crisis, diversity in climate policy design makes us stronger and, frankly, improves the odds that the young people we love will live in a world where they can thrive.

New Zealand created the second national emissions trading system in 2008, and the system established a number of firsts. Some have been widely copied, like output-based allocation of allowances to combat leakage risk. Others offer cautionary tales, like linking a small country’s emissions trading market to a much larger one over which it has little control.

Simplicity helps make an ETS more manageable and effective

New Zealand’s small scale makes simplicity a key virtue. Our regulators are well educated, but there just aren’t many of them. Simplicity would also be a strength in a country with capability constraints, or where corruption is a problem, since simplicity naturally increases transparency and reduces opportunities for manipulation.

Regulating fossil-fuel production and imports (which, in the absence of effective carbon capture and storage, inevitably lead to predictable amounts of emissions) at the first point of commercialization, another first, made monitoring simple. In the New Zealand context, it also minimized the number of regulated agents needed to achieve almost 100% coverage of energy-related emissions, including all domestic transport.

An ETS needs to match a country’s profile and culture

New Zealand’s small scale and unusual emissions profile (around half our emissions are biological emissions from agriculture – cow burps and other unmentionables – and we have lots of land ripe for reforestation) led New Zealand to aim for ‘all sources – all sectors’ coverage of emissions pricing. This worked well for our politics, because in New Zealand ‘fairness’ is a critical cultural value. New Zealand’s ETS covers energy, transportation and industrial process emissions, but also deforestation, reforestation, and fugitive emissions from fossil fuel production and landfill waste management. We are still working out how to cover those challenging cows in a way that allows rural communities to thrive; the current intention is to price emissions at the farm level starting in 2025.

The cultural value of fairness also led to a strong link between the motivations for free allocation and the methods chosen. Sectors and companies (sometimes pretty much the same thing in NZ) that lobbied for free allocation had to make a logical case that was publicly scrutinized. Lump-sum allocations were given as compensation to those losing the value of stranded assets, e.g. owners of pre-1990 forests, including Māori Iwi (tribes) who lost some of the value of forests they had recently received in Treaty settlements when deforestation began to attract carbon liabilities. Output-based allocation is still provided for industrial activities that are emissions-intensive and trade-exposed, and that therefore face a risk of these economic activities leaking to other countries where climate policy is weaker. By effectively subsidizing the activities that might move, output-based allocation reduces that risk.
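A stylized sketch of why output-based allocation counters leakage, offered as a textbook illustration and not as the allocation formula New Zealand actually legislates. Assume a firm produces $q$ units of output with emissions intensity $e$ (tonnes per unit), faces an emissions price $p$, and receives a free allocation of $a$ allowances per unit of output, where $a$ is a hypothetical benchmark set by the regulator rather than the firm's own emissions:

$$
C(q) = p\,(e\,q - a\,q) = p\,(e - a)\,q,
\qquad
\frac{\partial C}{\partial q} = p\,(e - a),
\qquad
\frac{\partial C}{\partial e} = p\,q
$$

Because the allocation grows with output, the carbon cost of producing one more unit falls from $p\,e$ to $p\,(e - a)$, which weakens the incentive to shift production offshore. Because $a$ does not depend on the firm's own emissions, every tonne avoided by lowering $e$ is still worth the full price $p$.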

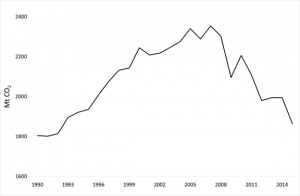

Political instability can negatively impact markets

Not all our experiences have been positive, however. As the report highlights, New Zealand’s ETS has suffered from a lack of policy stability and hence a lack of emissions price stability. This was partly because our emissions price was largely determined by international markets (from 2008 to mid-2015, New Zealand companies could buy and surrender unlimited amounts of international Kyoto units, such as those from the Clean Development Mechanism and Joint Implementation). New Zealand’s emissions prices bobbed like a cork on the international market. Another critical flaw was our failure to embed the ETS firmly in a long-term vision for low-emissions transformation, and within a wider non-political institutional framework that provides predictability of purpose as the ETS inevitably evolves.

The ability to guide, enable and incentivize dynamic efficiency (e.g. efficient low-emissions investment) has always been a key argument for emissions pricing, but as a profession, we economists have paid too little attention to the political and cultural stability needed to enable it. Policy makers need to regularly adapt and update policies. That process of policy evolution can help guide us efficiently through a low-emissions transformation or, in the face of powerful vested interests and strong temptations to free-ride globally, it can open up repeated opportunities to undermine ambition.

New Zealand is now engaged in the next step of its ETS evolution, learning from others and from critical reflection on our own positive and negative experiences, while continuing to innovate and tailor ETS solutions to our own unusual circumstances. The direct impact on global emissions will be small whatever New Zealand does with its ETS, but the lessons, and the example that even small yet significant countries can act and find new solutions, will hopefully help and inspire others.

Kia kaha, Aotearoa (stay strong, New Zealand)