This post is by Tony Kreindler, Media Director for the National Climate Campaign at Environmental Defense Fund. It’s the fourth in a series on Why a Bill in 2008:

1. Same Politics in 2009

2. Good versus Perfect

3. The Price of Waiting

4. The World is Waiting

5. Best Answer to High Gas Prices

Why push for a climate bill in 2008? I’ve already given some important reasons in my previous posts: the politics will be much the same in 2009, we don’t want to squander the current momentum, and in any case, we simply can’t afford to wait.

But if those aren’t reason enough, here’s another: The world is waiting for us to act. To solve the global warming problem, China and other developing countries also must cap their emissions, and they won’t do this until our own cap is in place.

From a New York Times report:

"China is not going to act in any sort of mandatory-control way until the United States does first," said Joseph Kruger, policy director for the National Commission on Energy Policy, a bipartisan group in Washington.

Along with India and other large developing countries, China has long maintained that the established industrial powers need to act first because they built their wealth largely by burning fossil fuels and adding to the atmosphere’s blanket of greenhouse gases.

If the U.S. – the wealthiest country on Earth – won’t establish a cap, then how can we expect developing countries to do it?

Global Negotiations Happening Now

Negotiations are underway already for a successor to the Kyoto Protocol (the international treaty to cap greenhouse gas emissions). All current Kyoto commitments expire in 2012. Some 190 countries met last December in Bali to begin the discussions. The plan is to approve a post-Kyoto framework at the 2009 meeting in Copenhagen. This gives countries the time needed to ratify and adopt implementing policies by 2012 so there’s no gap between the agreements.

The level of commitment that both developed and developing countries will agree to in Copenhagen depends heavily on the level of U.S. commitment, as reflected in our domestic policy. If the U.S. hasn’t adopted its own mandatory cap before the 2009 framework meeting, it could seriously hamper negotiations and jeopardize the chance for a global agreement in 2012.

Opportunities for U.S. Companies

A U.S. cap-and-trade system could provide developing countries with more than just an incentive. The economic opportunities it would create for U.S. firms could help developing countries meet mandatory targets. It’s win-win. There are two main avenues by which U.S. firms could profit:

- Purchase low-cost emissions offsets in developing countries.

- Sell clean energy technology to developing countries.

The U.S. has a longstanding advantage in world markets for products and services that require high technology, high added value, and complex engineering and industrial processes. These are exactly the types of energy-efficiency and emissions-reducing technologies needed to meet the challenge of a mandatory carbon cap. We can help developing countries leapfrog to a clean energy future.

But to remain at the forefront of these fields and sell into these markets, we need our own firm cap to spark the necessary innovation.

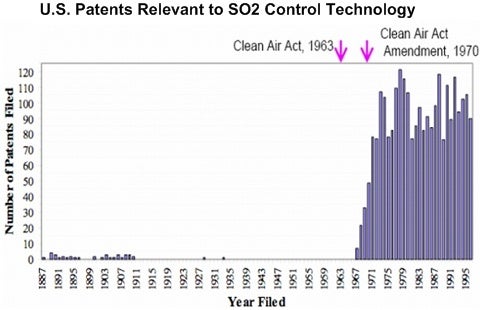

Research by Carnegie Mellon University showed that SO2-related patent filings spiked after the Clean Air Act, even though the government had been supporting research long before that. The researchers conclude, "The existence of national government regulation stimulated inventive activity more than government research support alone."

Source: The Effect of Government Actions on Technological Innovation for SO2 Control. The EPA/DOE/EPRI Mega Symposium, August 20-23, 2001.

Why is this so? A firm cap with no "safety valve", as in the Lieberman-Warner Climate Security Act of 2008, informs the market of the size of demand for emissions reductions and offsets, and informs potential investors of the likely demand for new technologies, alternative fuel sources, and offset projects. An unpredictably varying emissions target increases uncertainty for investors, and could adversely affect their access to investment capital.

So our taking the lead isn’t just about avoiding catastrophe; it’s also about significant economic opportunity. But to take advantage of the opportunity, we must act quickly. If we wait too long to enact climate legislation and spur the innovative power of our markets, we may find ourselves as buyers rather than sellers.

In my next and last post in this series, I’ll talk more about the huge commercial opportunity in the transition to clean energy technology, and what it would mean to win (or lose) this race.

2 Comments

This really isn’t a political issue. That’s why we shouldn’t do anything yet. I’ve been studying this issue as an impartial observer, and as an engineer with a scientific/technical background, it appears to me that the “denialists” are correct. Man is not responsible for global warming; global warming has peaked and is a blip in temperature that started about 100 years ago, after the Little Ice Age. The temperature rise is closely connected with cloud formation caused by cosmic ray density, which is related to the sun’s magnetic activity and the dust we pass through in space as the galaxy rotates. See the paper by Zbigniew Jaworowski.

It seems very premature to be basing global, national, state, or local legislation on a theory that garners so much controversy. The continual assertion that science proves what the media has come to call “Global Warming” is a pretty strong statement and bears further inspection because there are no absolute proofs except in mathematics.

With that said, I am willing to go wherever the science leads, but cannot support jumping to conclusions based on “what-if” or “it-might-happen” scenarios. Given the implications for our economy and personal freedoms, I imagine everyone would want to take a critical look at this issue before jumping on board. I took the time to summarize some of my thoughts in hopes it might help some people clarify their own analysis.

Proponents of Global Warming say:

The Earth is warming up due to anthropogenic greenhouse gas emissions and this will cause dire climate consequences for humanity.

This statement can be broken down into three assertions:

1. The Earth is warming up abnormally

2. A warmer Earth will cause more catastrophic weather, famine, plagues, drought, etc… than that which already exists

3. Man is causing it

Reworded in the form of potentially testable questions:

1. Is the Earth abnormally warming up?

If the answer to #1 is yes:

2. Will a warmer Earth cause more catastrophic weather, famine, plagues, drought, etc. than that which already exists?

3. Is man causing it?

Let’s explore each question individually:

Question 1: Is the Earth abnormally warming up?

Answer 1: Indeterminate. The answer depends on a great number of starting assumptions, answers to sub-questions, and the quality of available data.

Some of the sub-questions are:

* Compared to what?

* How far back in the geologic scale do we go for the comparison?

* Do we exclude climate anomalies from our comparison data that we “believe” are caused by one-time events or persistent climate factors that we think we understand (e.g., the ice ages, the Jurassic period)?

* Can we accurately, with a low margin of error, determine historical temperature data?

* Can we merge historical data of unknown precision with data from recent history that is high precision and still achieve meaningful results?

* Does the Earth have a normal temperature?

* If so, how is it defined and who defined it?

* Is there a cyclical rhythm to Earth’s temperature?

* If so, does the current trend fall within the range of the current temperature cycle?

* Are there adequate quantities of data from enough geographically distributed locations to make vast, global generalizations about temperature?

* How detailed are the conclusions we can draw about historic temperature from the data? Can we tell temperature for a given day, month, year, decade, or century?

* How accurate are the different temperature proxies and how are the results averaged?

* etc…

These sub-questions form the methodology and assumptions section of a good scientific study. Regrettably, there seem to be two polarized camps with precious few in between. Neither camp seems to be totally intellectually honest, but the climate scientists who are asserting there is a crisis seem particularly intent on shutting down debate and dogmatically defending their work. I find it particularly offensive when these climate scientists pull the “it’s too complex for you to understand” card. Since the entire Global Warming assertion, and its dire implications, rests on the answer to this one question, it seems that our entire scientific community, of all disciplines, should be gladly invited to participate in the discussion so as to arrive at the best conclusion possible. Good science does not happen in a cloistered environment.

Setting aside the lack of consensus and assuming the answer is yes (the Earth is in an abnormal warming trend), the next two questions need to be addressed.

Question 2: Will a warmer Earth cause more catastrophic weather, famine, plagues, drought, etc. than that which already exists?

Answer 2: Indeterminate. This is not directly testable or predictable.

* How does one average out the impacts of global catastrophe? Warming may be beneficial to one geographic area and catastrophic in another. There is anecdotal history that indicates the existence of a medieval warm period that gave birth to the European age of learning. The period is said to have been warmer than modern temperature trends by several degrees, and it can be argued that this development was very good for Europe. However, whether it was “globally” good is another question altogether. Is there other anecdotal historical data indicating relatively “more” catastrophic weather during this time period at other geographic locations?

Regardless of the anecdotal data, this question should really be broken out into its own hypothesis and treated as a separate study.

Global Warming Sub-Hypothesis 1 – There is a direct causative effect between global (not regional) temperature and catastrophic weather.

There are two steps to testing this hypothesis. First, future temperatures must be predicted. Second, the predicted temperatures must be used to predict whether or not catastrophic weather will happen. The accuracy of the first step dictates the accuracy of the second.

Disclaimer: There is a tremendous amount of background that goes into each aspect of this science, so a complete discussion is unreasonable and impossible in this forum. Unfortunately, this means that this discussion will contain vast generalizations which can readily be argued about. Please accept my apologies in advance.

What follows is a brief discussion on modeling systems using computational methods. I am an ardent supporter of these models when they are viewed in the proper context, and I want to recognize the herculean effort that has gone into producing the current state of the art. I am in awe of how accurate these models have become and in no way want to impugn the research and effort that went into creating them. However, computational models have limitations that place a boundary around the specific conclusions that may be drawn from their results.

Since this hypothesis is not empirically testable, scientists must create computational climate modeling engines that use historical data to predict future results. These models are continually updated to more accurately account for the historical data and presumably become better over time.

However, a model is only as good as the reliability of the data going into it and the quality of its results over time. Every time a model is revised, the scientific community should “reset” acceptance of its results until predictive accuracy has been re-established. Note: Although methodology for testing a computational model is way outside the scope of this document, suffice it to say that successfully running historic data through the computation engine should not be construed as defining predictive accuracy.

As a software engineer who creates software models for a living, I can attest that models can be very accurate if the number of variables in the system being modeled is limited and the system being modeled is well understood. However, there is no way to completely model a very complex system, due to limitations both in our understanding of the problem domain and in computational capability.

The trouble with the climate modeling problem domain is it requires exceptionally detailed knowledge in physical chemistry, biology, fluid dynamics, thermodynamics, heat transfer, geographic impacts on climate, computer numerical methods, and more. These are all highly specialized fields in their own right and exercise of each discipline in the highly interactive environment of a climate model increases their complexity significantly. Because of this, a climate model is necessarily also extremely complex, has many thousands of variables, and not all the mechanisms are fully understood (although a good many are). There is no way to completely model this system and anyone who argues that they have accomplished it is a liar.

A primary strength of a computational model is that it can run and re-run scenarios using different values for the variables you are interested in. You can cause variables to change at certain rates, such as CO2 added to the atmosphere per year, and quickly see a predicted outcome. This repeatability is both a boon and a bane. While you can quickly see the results of parameter changes, you can’t always trust that the results are accurate unless all of the foundational assumptions are met. If your model only works within a narrow range of parameters and the variable interactions aren’t captured accurately for conditions outside of that range, the model may return garbage results.

In order for a computational model to be useful, the resultant data set must accurately reflect reality within some specified margin of error. Accuracy could be measured by correct predictions of the quantity and intensity of hurricanes or the geographic boundaries, persistence and severity of drought conditions among other things. If the predictions aren’t terribly accurate, subsequent iterations of prediction will be less and less accurate. Small errors in the model’s predictive ability will most certainly add up to enormous errors over a time progression and seriously skew results.

An honest model will provide upper, lower, and most probable values for each result and then calculate the next predicted value based on these three numbers. A resultant graph of the data should have the appearance of a mean line bounded by lines diverging in either direction from the mean line. The diverging lines represent the increasing levels of uncertainty associated with the data. Obviously, the longer the progression continues, the less certain the data.
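To make the diverging-bounds idea concrete, here is a toy sketch, not a climate model: a hypothetical quantity that grows each step by a rate known only within a range. The starting value of 100 and the low, most probable, and high rates of 0.9%, 1.0%, and 1.1% are illustrative assumptions. Each bound is carried forward from its own previous value, so the gap between the low and high lines widens at every step.

```python
def propagate_bounds(start, rate_low, rate_mid, rate_high, steps):
    """Toy recurrence x[n+1] = x[n] * (1 + rate), run separately for the
    low, most probable, and high rate (all hypothetical values).

    Returns a list of (low, mid, high) tuples, one per step, including step 0.
    """
    low = mid = high = start
    history = [(low, mid, high)]
    for _ in range(steps):
        # Each line is projected from its own previous value, so a small
        # difference in rate compounds into a growing spread over time.
        low *= 1 + rate_low
        mid *= 1 + rate_mid
        high *= 1 + rate_high
        history.append((low, mid, high))
    return history


if __name__ == "__main__":
    bounds = propagate_bounds(100.0, 0.009, 0.010, 0.011, 50)
    for step, (lo, mid, hi) in enumerate(bounds):
        if step % 10 == 0:
            print(f"step {step:2d}: low={lo:8.2f} mid={mid:8.2f} "
                  f"high={hi:8.2f} spread={hi - lo:6.2f}")
```

Plotting the three columns against the step number gives the picture described above: a middle line bounded by two lines that move steadily apart.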

In short, while the models are a useful tool, they are highly dependent upon the quality of input data and accuracy of system variable interactions. The quality and interpretation of the historical input data is not agreed upon and the model of the climate variable interactions is incomplete (thus the use of fudge factors) so the model’s predictive ability is called into question. Again, the answer to the question is indeterminate (although climate scientists would vehemently argue this point).

Now, the question that dictates humanity’s response to the crisis.

Question 3: Are man’s activities causing this warming?

Answer 3: Indeterminate.

The answer to this question relies on computer modeling as well. The same model used above to predict temperature is used to “discover” whether man’s greenhouse gas emissions are the predominant cause of any abnormal warming of the Earth. It’s kind of a chicken-and-egg approach to computer modeling. In order to determine whether man is the cause of global warming, we must have a way to predict climate temperature based on man’s inputs to the environment. However, in order to predict temperature, the model takes greenhouse gas levels into account and assigns them a relationship to climate temperature (a forcing value), which is the very relationship whose existence we are trying to discover. In order to make the model match historical temperatures (we’ve already discussed the uncertainty around this data), these forcing values were “adjusted.” This is modeling by fudge factor based on a pre-decided outcome, folks.

So what is the take-home? Discounting the naysayers who say man is definitely not causing any of it and those who say man is definitely causing all of the global warming, we arrive somewhere in the middle. Man exhibits some impact but is not the predominant factor in determining Earth’s temperature. The following website goes into decidedly more detail, in a more user-friendly format than this:

http://www.weatherquestions.com/Roy-Spencer-on-global-warming.htm

Does this “crisis” warrant the Global Warming proponents’ solution: using global, national, state, and local legislative means to place economically punitive and individual-freedom-stripping laws on the earth’s populace to “reduce their carbon footprint”?

The resounding answer is – NOT BASED ON THIS SCIENCE.